Note

This page was generated from tutorials/finance/10_qgan_option_pricing.ipynb.

Option Pricing with qGANs

Introduction

In this notebook, we discuss how a quantum machine learning algorithm, namely a quantum Generative Adversarial Network (qGAN), can facilitate the pricing of a European call option. More specifically, a qGAN can be trained such that a quantum circuit models the spot price of the asset underlying a European call option. The resulting model can then be integrated into a Quantum Amplitude Estimation based algorithm to evaluate the expected payoff; see European Call Option Pricing. For further details on learning and loading random distributions by training a qGAN, please refer to Zoufal, Lucchi, Woerner, "Quantum Generative Adversarial Networks for Learning and Loading Random Distributions", 2019.

[1]:

import matplotlib.pyplot as plt

import numpy as np

from qiskit import Aer, QuantumRegister, QuantumCircuit

from qiskit.circuit import ParameterVector

from qiskit.circuit.library import TwoLocal, NormalDistribution

from qiskit.quantum_info import Statevector

from qiskit.aqua import aqua_globals, QuantumInstance

from qiskit.aqua.algorithms import IterativeAmplitudeEstimation

from qiskit.finance.applications import EuropeanCallExpectedValue

Uncertainty Model

The Black-Scholes model assumes that the spot price at maturity \(S_T\) for a European call option is log-normally distributed. Thus, we can train a qGAN on samples from a log-normal distribution and use the result as an uncertainty model underlying the option. In the following, we construct a quantum circuit that loads the uncertainty model. The circuit output reads

\[\big| g_{\theta}\rangle = \sum_{j=0}^{2^n-1}\sqrt{p_{\theta}^{j}} \big| j \rangle ,\]

where the probabilities \(p_{\theta}^{j}\), for \(j\in \left\{0, \ldots, {2^n-1} \right\}\), represent a model of the target distribution.
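The link between a discrete probability distribution and the amplitudes of such a state can be sketched in plain Python. The sketch below is only an illustration: it discretizes a log-normal density by evaluating it on the 8 grid points and normalizing, which is an assumption and not the sampling-based procedure used later in this notebook. The helper name `lognormal_pdf` is hypothetical.

```python
import math

def lognormal_pdf(x, mu=1.0, sigma=1.0):
    """Density of a log-normal distribution (hypothetical helper)."""
    if x <= 0:
        return 0.0
    z = (math.log(x) - mu) / sigma
    return math.exp(-0.5 * z * z) / (x * sigma * math.sqrt(2 * math.pi))

n = 3                      # number of qubits, as in the notebook
low, high = 0.0, 7.0       # data bounds, as in the notebook

# Grid point represented by each basis state |j>, j = 0, ..., 2^n - 1
grid = [low + (high - low) * j / (2 ** n - 1) for j in range(2 ** n)]

# Assumption: approximate the target by the normalized density on the grid
weights = [lognormal_pdf(x) for x in grid]
total = sum(weights)
probs = [w / total for w in weights]

# Amplitudes of the encoded state are the square roots of the probabilities
amplitudes = [math.sqrt(p) for p in probs]

# Squared amplitudes must sum to one for a valid quantum state
norm = sum(a * a for a in amplitudes)
print(norm)
```

Measuring such a state in the computational basis would return index `j` with probability `probs[j]`, which is what makes the circuit usable as an uncertainty model.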

[2]:

# Set upper and lower data values

bounds = np.array([0.,7.])

# Set number of qubits used in the uncertainty model

num_qubits = 3

# Load the trained circuit parameters

g_params = [0.29399714, 0.38853322, 0.9557694, 0.07245791, 6.02626428, 0.13537225]

# Set an initial state for the generator circuit

init_dist = NormalDistribution(num_qubits, mu=1., sigma=1., bounds=bounds)

# construct the variational form

var_form = TwoLocal(num_qubits, 'ry', 'cz', entanglement='circular', reps=1)

# keep a list of the parameters so we can associate them to the list of numerical values

# (otherwise we need a dictionary)

theta = var_form.ordered_parameters

# compose the generator circuit, this is the circuit loading the uncertainty model

g_circuit = init_dist.compose(var_form)
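With `reps=1`, a `TwoLocal` circuit on 3 qubits has two layers of `ry` rotations around one entangling layer, so it carries 3 × 2 = 6 parameters, matching the length of `g_params`. The following pure-Python sketch simulates a circuit of that shape on a real statevector. It is a rough illustration under several assumptions: the initial state is uniform rather than the `NormalDistribution` used above, and the qubit and parameter ordering are not guaranteed to match Qiskit's, so the resulting numbers are not expected to reproduce the trained distribution. The function names are hypothetical.

```python
import math

def apply_ry(state, qubit, theta):
    """Apply RY(theta) to one qubit of a real statevector."""
    c, s = math.cos(theta / 2), math.sin(theta / 2)
    new = list(state)
    for i in range(len(state)):
        if not (i >> qubit) & 1:          # index with the target bit cleared
            j = i | (1 << qubit)          # partner index with the bit set
            a0, a1 = state[i], state[j]
            new[i] = c * a0 - s * a1
            new[j] = s * a0 + c * a1
    return new

def apply_cz(state, q1, q2):
    """Apply CZ: flip the sign where both qubits are 1."""
    return [-a if ((i >> q1) & 1 and (i >> q2) & 1) else a
            for i, a in enumerate(state)]

def ansatz_probabilities(params, init_probs, n=3):
    """RY layer, circular CZ layer, RY layer; returns outcome probabilities."""
    state = [math.sqrt(p) for p in init_probs]
    for q in range(n):
        state = apply_ry(state, q, params[q])
    for q in range(n):
        state = apply_cz(state, q, (q + 1) % n)   # circular entanglement
    for q in range(n):
        state = apply_ry(state, q, params[n + q])
    return [a * a for a in state]

g_params = [0.29399714, 0.38853322, 0.9557694, 0.07245791, 6.02626428, 0.13537225]
uniform = [1 / 8] * 8      # assumption: uniform initial distribution
probs = ansatz_probabilities(g_params, uniform)
print(sum(probs))
```

Because every gate is unitary, the output is always a valid probability distribution regardless of the parameter values; training only changes its shape.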

Evaluate Expected Payoff

Now, the trained uncertainty model can be used to evaluate the expectation value of the option’s payoff function with Quantum Amplitude Estimation.

[3]:

# set the strike price (should be within the low and the high value of the uncertainty)

strike_price = 2

# set the approximation scaling for the payoff function

c_approx = 0.25

# construct circuit for payoff function

european_call_objective = EuropeanCallExpectedValue(
    num_qubits,
    strike_price=strike_price,
    rescaling_factor=c_approx,
    bounds=bounds
)
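The role of an approximation scaling such as `c_approx` can be illustrated with the identity \(\sin^2(y + \pi/4) = 1/2 + \sin(2y)/2\), which is close to \(1/2 + y\) for small \(y\). A linear payoff scaled into a small range can therefore be read off from a measurement probability. The sketch below compares the two expressions numerically; it assumes the scaled payoff lies in \([-c, c]\), which is a simplification for illustration and not a statement about the library's internal scaling. The function names are hypothetical.

```python
import math

def encoded_probability(y):
    """Probability produced by a rotation by angle y + pi/4."""
    return math.sin(y + math.pi / 4) ** 2

def linear_approximation(y):
    """First-order approximation of encoded_probability around y = 0."""
    return y + 0.5

def max_error(c, steps=101):
    """Largest absolute approximation error for y scanned over [-c, c]."""
    worst = 0.0
    for k in range(steps):
        y = -c + 2 * c * k / (steps - 1)
        worst = max(worst, abs(encoded_probability(y) - linear_approximation(y)))
    return worst

for c in (0.5, 0.25, 0.1):
    print(c, max_error(c))
```

A smaller scaling factor reduces the approximation error but also compresses the payoff into a narrower range of probabilities, so the estimate has to be more precise to resolve it.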

Plot the probability distribution

Next, we plot the trained probability distribution and, for comparison, the target probability distribution.

[4]:

# Evaluate trained probability distribution

values = [bounds[0] + (bounds[1] - bounds[0]) * x / (2 ** num_qubits - 1) for x in range(2**num_qubits)]

uncertainty_model = g_circuit.assign_parameters(dict(zip(theta, g_params)))

amplitudes = Statevector.from_instruction(uncertainty_model).data

x = np.array(values)

y = np.abs(amplitudes) ** 2

# Sample from target probability distribution

N = 100000

log_normal = np.random.lognormal(mean=1, sigma=1, size=N)

log_normal = np.round(log_normal)

log_normal = log_normal[log_normal <= 7]

log_normal_samples = []

for i in range(8):
    log_normal_samples += [np.sum(log_normal == i)]

log_normal_samples = np.array(log_normal_samples) / sum(log_normal_samples)

# Plot distributions

plt.bar(x, y, width=0.2, label='trained distribution', color='royalblue')

plt.xticks(x, size=15, rotation=90)

plt.yticks(size=15)

plt.grid()

plt.xlabel(r'Spot Price at Maturity $S_T$ (\$)', size=15)

plt.ylabel(r'Probability ($\%$)', size=15)

plt.plot(log_normal_samples,'-o', color ='deepskyblue', label='target distribution', linewidth=4, markersize=12)

plt.legend(loc='best')

plt.show()
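The construction of the target histogram in the cell above (sample, round to integers, discard values above 7, normalize) can also be sketched with the standard library only. This is a minimal sketch, assuming the same log-normal parameters as the notebook; the function name `discretized_lognormal` and the fixed seed are hypothetical choices for illustration.

```python
import random

def discretized_lognormal(num_samples=100000, mu=1.0, sigma=1.0,
                          max_value=7, seed=0):
    """Empirical distribution of rounded log-normal samples on 0..max_value."""
    rng = random.Random(seed)
    counts = [0] * (max_value + 1)
    for _ in range(num_samples):
        value = round(rng.lognormvariate(mu, sigma))
        if value <= max_value:           # truncate to the grid of the model
            counts[value] += 1
    kept = sum(counts)
    # Normalize by the number of kept samples so the probabilities sum to one
    return [c / kept for c in counts]

target = discretized_lognormal()
print(target)
```

Note that truncating at 7 and renormalizing shifts probability mass onto the grid, so the result is a model of the truncated distribution rather than of the full log-normal.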

Evaluate Expected Payoff¶

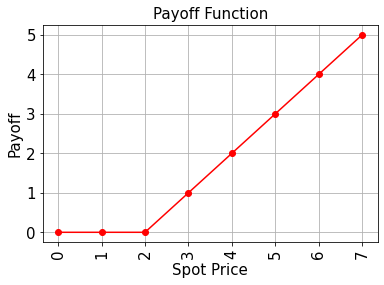

Now, the trained uncertainty model can be used to evaluate the expectation value of the option’s payoff function analytically and with Quantum Amplitude Estimation.

[5]:

# Evaluate payoff for different distributions

payoff = np.array([0,0,0,1,2,3,4,5])

ep = np.dot(log_normal_samples, payoff)

print("Analytically calculated expected payoff w.r.t. the target distribution: %.4f" % ep)

ep_trained = np.dot(y, payoff)

print("Analytically calculated expected payoff w.r.t. the trained distribution: %.4f" % ep_trained)

# Plot exact payoff function (evaluated on the grid of the trained uncertainty model)

x = np.array(values)

y_strike = np.maximum(0, x - strike_price)

plt.plot(x, y_strike, 'ro-')

plt.grid()

plt.title('Payoff Function', size=15)

plt.xlabel('Spot Price', size=15)

plt.ylabel('Payoff', size=15)

plt.xticks(x, size=15, rotation=90)

plt.yticks(size=15)

plt.show()

Analytically calculated expected payoff w.r.t. the target distribution: 1.0667

Analytically calculated expected payoff w.r.t. the trained distribution: 0.9805
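The hard-coded payoff vector `[0,0,0,1,2,3,4,5]` is the European call payoff \(\max(0, S_T - K)\) evaluated on the integer grid 0 to 7 with strike price 2, and the expected payoff is its dot product with the probabilities. A minimal sketch in plain Python, using a uniform distribution purely as a placeholder (the helper names are hypothetical):

```python
def call_payoff(spot, strike):
    """Payoff of a European call option at maturity."""
    return max(0.0, spot - strike)

def expected_payoff(probs, grid, strike):
    """Probability-weighted average of the call payoff over the grid."""
    return sum(p * call_payoff(x, strike) for p, x in zip(probs, grid))

grid = list(range(8))                       # spot prices 0, 1, ..., 7
payoff = [call_payoff(x, 2) for x in grid]  # strike price 2, as in the notebook

uniform = [1 / 8] * 8                       # placeholder distribution
example = expected_payoff(uniform, grid, 2)
print(payoff, example)
```

Replacing `uniform` with the trained or target probabilities gives the two "analytically calculated" values printed above.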

[6]:

# construct A operator for QAE

european_call = european_call_objective.compose(uncertainty_model, front=True)

[7]:

# set target precision and confidence level

epsilon = 0.01

alpha = 0.05

# construct amplitude estimation

ae = IterativeAmplitudeEstimation(epsilon=epsilon, alpha=alpha,
                                  state_preparation=european_call,
                                  objective_qubits=[num_qubits],
                                  post_processing=european_call_objective.post_processing)

[8]:

result = ae.run(quantum_instance=Aer.get_backend('qasm_simulator'), shots=100)

[9]:

conf_int = np.array(result['confidence_interval'])

print('Exact value: \t%.4f' % ep_trained)

print('Estimated value: \t%.4f' % (result['estimation']))

print('Confidence interval:\t[%.4f, %.4f]' % tuple(conf_int))

Exact value: 0.9805

Estimated value: 1.0255

Confidence interval: [0.9932, 1.0578]
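The printed numbers can be compared directly. In this particular run the exact value lies slightly below the reported confidence interval; this may be related to the linear payoff approximation controlled by `c_approx` and to the finite number of shots, although the output alone does not establish the cause. The sketch below only restates the printed values and checks the containment; the helper name `contains` is hypothetical.

```python
exact = 0.9805                 # analytic value w.r.t. the trained distribution
estimate = 1.0255              # amplitude estimation result
interval = (0.9932, 1.0578)    # reported confidence interval

def contains(interval, value):
    """Return True if value lies inside the closed interval."""
    low, high = interval
    return low <= value <= high

inside = contains(interval, exact)
gap = interval[0] - exact                      # distance below the lower bound
half_width = (interval[1] - interval[0]) / 2   # half-width of the interval

print(inside, round(gap, 4), round(half_width, 4))
```

Since the simulation is sampled, rerunning the estimation can produce a different interval.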

[10]:

import qiskit.tools.jupyter

%qiskit_version_table

%qiskit_copyright

Version Information

| Qiskit Software | Version |
|---|---|
| Qiskit | 0.24.1 |
| Terra | 0.16.4 |
| Aer | 0.7.6 |
| Ignis | 0.5.2 |
| Aqua | 0.8.2 |
| IBM Q Provider | 0.12.2 |
| System information | |
| Python | 3.7.7 (default, Apr 22 2020, 19:15:10) [GCC 9.3.0] |
| OS | Linux |
| CPUs | 32 |
| Memory (Gb) | 125.71903228759766 |
| Tue May 25 15:17:58 2021 EDT | |

This code is a part of Qiskit

© Copyright IBM 2017, 2021.

This code is licensed under the Apache License, Version 2.0. You may

obtain a copy of this license in the LICENSE.txt file in the root directory

of this source tree or at http://www.apache.org/licenses/LICENSE-2.0.

Any modifications or derivative works of this code must retain this

copyright notice, and modified files need to carry a notice indicating

that they have been altered from the originals.